In 2014, when artificial intelligence (AI) first began to enter the commercial market, we knew the critical link was the human interaction with cognitive machines, but even the most salient proof points at that time were only hypothetical.

Then March 18th, 2018, happened. On that evening in Tempe, Arizona, a self-driving Uber – one with a back-up human driver on board – struck and killed a female pedestrian.

How could that happen? The answer is: very predictably.

Since the accident, we have learned that the back-up driver was allegedly more than a little distracted by, among other things, watching a streaming version of “The Voice” for as much as one-third of the trip before the fatal accident.

It was a predictable outcome for a variety of reasons.

- First, accidents happen, AI or no AI. In 2016, an Ohio man was killed when his Tesla Model S, operating in Autopilot mode, crashed into a tractor-trailer in Florida. Tempe was a first only because the victim had not chosen to accept the risks of autonomous driving for herself.

- Second, physics are physics. A Volvo XC90, like the one in the crash, weighs in at a little over two tons. In 2016, one reviewer wrote: “Bad news for walls, telephone poles, and other solid objects — the new Volvo XC90 looks like it can crush you into oblivion.” Traveling at a speed north of 40 mph, as the Tempe vehicle was, an average-sized vehicle would take approximately 120 feet to stop. The potential for danger will always be there.

- Third, and most important to this narrative, we already knew that the trickiest part of the man-machine partnership is the intersection between the man and the machine itself. For AI to be most effective, we simply can’t ignore human behavior. The hand-offs matter.

In 2014, Google decided to scrap its prior approach to self-driving cars and head in an entirely new direction. After hundreds of thousands of hours of development and highway testing, Google smartly realized that drivers in stand-by mode quickly find themselves bored. When it is our turn to take the wheel, we human drivers might be reading, texting, Googling – or worse – watching mediocre televised singing competitions. The lesson learned was that relying on a driver to play a back-up role practically invited disaster.

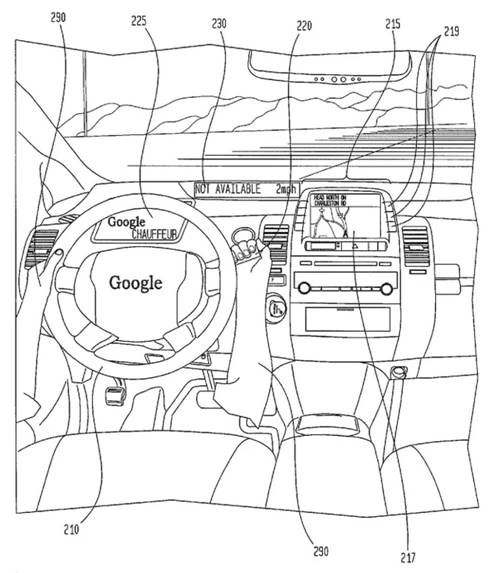

Figure 1: Illustration from Google's Original Patent Application

Subsequently, Google's patent application diagrams (as seen above) and development work stripped the car of its steering wheel and accelerator pedal. Using AI, the car would assume 100 percent of the operating responsibilities, save an emergency brake that a human could use if he or she detected a danger that the autonomous car could not.

The lessons for enterprises interested in using AI are these:

- While accidents happen, we need to tirelessly learn from them for AI to work. Fortunately, most applications of AI technologies in corporate settings have less dire consequences than the autonomous car example. Still, inadvertent outcomes, such as misusing personal information, misidentifying patient treatment options or recommending trading based on the wrong cues, can be harmful in their own right. To learn from past “accidents,” enterprises should maintain a center of excellence that can help ensure that successive business units avoid past errors. They should also maintain and continuously update an object library.

- “Physics are physics,” and certain business process norms are unlikely to change, but AI that is properly applied can still lead to superior results. Fully 33 percent of the 120-foot stopping distance for a car traveling at 40 mph can be attributed to human reaction time rather than the braking ability of the vehicle. Similarly, much of the process time in a “know your customer” (KYC) process may be spent waiting for human approval, but the rest can easily be automated for faster client onboarding.

- As the Google reboot demonstrates, practitioners of AI should be open and willing to rethink approaches to its applications. As we do so, we need to think not only about organizational change management, but extra-organizational change, too. It is not only the human working with the machine who needs to be considered; in this example, quite literally, the implications for the man or woman on the street matter as well. The point is, we need to consider all of the stakeholders in AI, including those who don’t have an active role in choosing to use the technology.
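
The stopping-distance split cited in the second lesson can be reproduced with a short calculation. The sketch below is illustrative only: it assumes constant deceleration, and the reaction time (0.68 s) and deceleration (21.5 ft/s²) are hypothetical values chosen so the result lands near the 120-foot figure quoted above. They are not measurements from the Tempe crash or from any particular vehicle.

```python
MPH_TO_FTPS = 5280 / 3600  # feet per second in one mile per hour


def stopping_distance_ft(speed_mph, reaction_time_s, decel_ftps2):
    """Return (reaction_ft, braking_ft) for a vehicle at constant deceleration.

    reaction_ft: distance covered before the driver starts braking.
    braking_ft:  distance covered while braking, from v^2 / (2a).
    """
    v = speed_mph * MPH_TO_FTPS
    reaction_ft = v * reaction_time_s
    braking_ft = v ** 2 / (2 * decel_ftps2)
    return reaction_ft, braking_ft


# Assumed inputs (hypothetical, picked to match the article's figures).
reaction_ft, braking_ft = stopping_distance_ft(
    speed_mph=40, reaction_time_s=0.68, decel_ftps2=21.5
)
total_ft = reaction_ft + braking_ft
human_share = reaction_ft / total_ft

print(f"reaction: {reaction_ft:.0f} ft, braking: {braking_ft:.0f} ft")
print(f"total: {total_ft:.0f} ft, human share: {human_share:.0%}")
```

Under these assumptions, roughly one-third of the total distance passes before the brakes are even applied. That is the portion an automated system with a shorter reaction time could reduce, just as automation can reduce the human-approval wait in a KYC process.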

ISG helps organizations identify, plan and execute on AI strategies while always keeping front and center the critical intersection between users and technology. More than ever, change management is important to getting it right – and helping enterprises gain the maximum benefit from AI.

The tragedy in Tempe aside, most experts believe AI will help reduce accidents over time, not increase them. With our clients, we’ve already seen that it can lead to better business outcomes, too. Contact us to find out more.