Recently, Google announced that it had achieved quantum supremacy with its “Sycamore” quantum computer. According to Google, Sycamore completed a specific, carefully chosen calculation that would be impractical for even the fastest classical supercomputers. This milestone raises fundamental questions about how quantum computing can be used and how it will affect initiatives in the digital era.

Every day, humans create more than 2.5 exabytes of data, and that number continues to grow, especially with the rise of the internet of things (IoT) and 5G networks. Machine learning (ML) and artificial intelligence (AI) are among the tools organizations use to manage and analyze data for competitive advantage. However, as data volumes grow and the demand for meaningful insights increases, collecting and analyzing that data may become increasingly complex.

As classical binary computing approaches its performance limits, quantum computing is becoming one of the fastest-growing digital trends, and many expect it to help address the big data challenges of the future. Though practical quantum computing is still on the horizon, the U.S. plans to invest more than $1.2 billion in quantum information science as part of a 10-year national initiative, in a race to build the world’s best quantum technology.

What Is Quantum Computing?

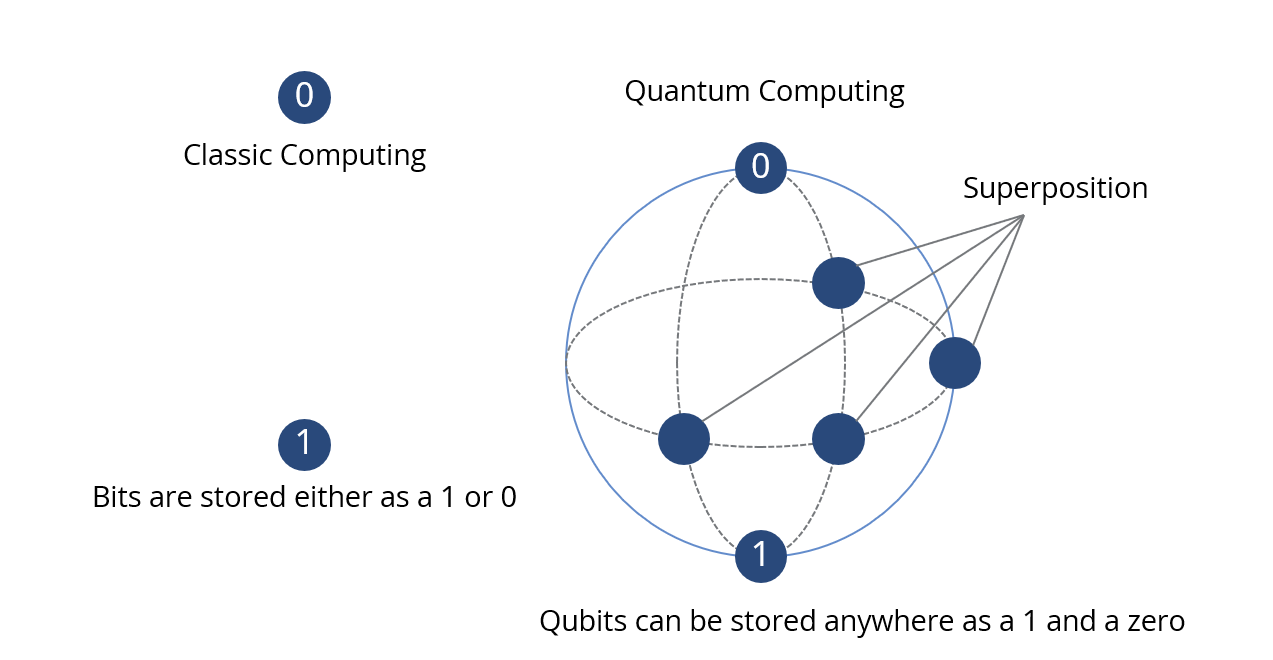

Quantum computing combines quantum physics, computer science and information theory, and most experts agree it has the potential to shape the future of digital business and security. How does it differ from classical computing? Traditional computing uses a base-two (binary) number system: it stores, processes and communicates data as bits, each of which is either a one or a zero. A sequence of bits forms a binary code. For example, in the ASCII encoding the letter “A” is stored as 01000001. The limitation is that a register of n bits holds exactly one of its 2^n possible values at any moment, so a classical computer exploring many possibilities must work through them one after another or add more hardware to run them in parallel. As data sets and problem sizes grow, the time required can grow faster than the available computing power.
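To make the binary example concrete, here is a minimal Python sketch (standard library only) that prints the bit pattern for “A” and counts how many distinct values an n-bit register can represent. The function names `to_binary` and `possible_values` are illustrative helpers, not part of any particular library.

```python
def to_binary(ch: str) -> str:
    """Return the 8-bit binary pattern of a single ASCII character."""
    code_point = ord(ch)  # "A" -> 65
    if code_point > 0x7F:
        raise ValueError("this sketch only handles 7-bit ASCII characters")
    return format(code_point, "08b")  # zero-padded to 8 binary digits


def possible_values(n_bits: int) -> int:
    """An n-bit register can represent 2**n values, but holds only one at a time."""
    return 2 ** n_bits


print(to_binary("A"))      # 01000001
print(possible_values(8))  # 256
```

Each extra bit doubles the number of values a register could hold, yet at any instant it still stores just one of them.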

Quantum computing is fundamentally different because it uses quantum bits (qubits). Like a bit, a qubit can be read out as a one or a zero, but before it is measured it can also exist in a superposition: a weighted combination of both states at the same time. When the qubit is measured, the superposition collapses into one definite state, one or zero, with probabilities set by those weights. Superposition is what makes qubits so powerful. A register of n qubits can represent a combination of all 2^n values at once, and quantum algorithms exploit interference between these possibilities to reduce the number of operations needed for certain complex problems. For those problems, this could mean reaching answers faster and with less power consumption than classical approaches.
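For intuition, the following is a toy classical simulation of a single qubit in Python, assuming the standard textbook state-vector model: a qubit is a pair of complex amplitudes, a Hadamard gate turns the |0⟩ state into an equal superposition, and measurement returns 0 or 1 with probability equal to the squared magnitude of each amplitude. The helper names are hypothetical, and real quantum hardware is not programmed this way. The sketch also hints at why simulating qubits on classical machines gets expensive: describing n qubits takes 2^n amplitudes.

```python
import math
import random

# Amplitudes for the basis states |0> and |1>; this qubit starts in |0>.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate: |0> becomes an equal superposition of |0> and |1>."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    return tuple(abs(amp) ** 2 for amp in state)


def measure(state, rng):
    """Return a definite 0 or 1 and the collapsed state that matches it."""
    p0, _ = probabilities(state)
    outcome = 0 if rng.random() < p0 else 1
    collapsed = (1 + 0j, 0j) if outcome == 0 else (0j, 1 + 0j)
    return outcome, collapsed


def amplitudes_needed(n_qubits: int) -> int:
    """A classical description of n qubits needs 2**n complex amplitudes."""
    return 2 ** n_qubits


rng = random.Random(0)  # seeded so the run is repeatable
plus = hadamard(ZERO)
print(probabilities(plus))  # approximately (0.5, 0.5)

counts = [0, 0]
for _ in range(10_000):
    outcome, _collapsed = measure(plus, rng)
    counts[outcome] += 1
print(counts)  # roughly 5,000 of each outcome
```

Measuring the same superposition many times yields 0 and 1 in roughly equal proportion, but any single measurement returns just one bit. Superposition alone does not produce a speed-up; useful quantum algorithms arrange interference so that correct answers become more likely when measured.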

Impact on AI and ML

Analyzing massive amounts of data is complex. In fact, it can be nearly impossible for humans to sift through a large volume of data and find correlated information that has actionable value. Organizations create so much data that much of it goes unused, and many are missing opportunities that could give them a competitive advantage.

It is with these large data sets that ML can be especially helpful. ML requires a great deal of historical data and must be fed new information continually so that it can learn how the data changes and identify trends over time. However, as the volume of data increases, so does the computational complexity, along with the time needed to analyze, calculate, identify, interpret and deliver any relevant output. Machines do not know how to tell a story with data; they simply provide the raw material.
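One common way to keep a model current as new data arrives is online (incremental) learning. The plain-Python sketch below uses a hypothetical `OnlineTrend` class that fits a straight-line trend by keeping running sums, so each new observation is folded in with a constant amount of work instead of refitting on the full history. It is a classical technique chosen only to illustrate continually feeding a model new data; real ML pipelines use far richer models.

```python
from dataclasses import dataclass


@dataclass
class OnlineTrend:
    """Incrementally fitted least-squares line: y = slope * x + intercept.

    Only running sums are stored, so each update costs O(1) work.
    """

    n: int = 0
    sum_x: float = 0.0
    sum_y: float = 0.0
    sum_xx: float = 0.0
    sum_xy: float = 0.0

    def update(self, x: float, y: float) -> None:
        """Fold one new observation into the running sums."""
        self.n += 1
        self.sum_x += x
        self.sum_y += y
        self.sum_xx += x * x
        self.sum_xy += x * y

    def slope(self) -> float:
        denom = self.n * self.sum_xx - self.sum_x ** 2
        if denom == 0:
            raise ValueError("need at least two distinct x values")
        return (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom

    def intercept(self) -> float:
        return (self.sum_y - self.slope() * self.sum_x) / self.n


trend = OnlineTrend()
for day, value in enumerate([10.0, 12.0, 14.0, 16.0]):
    trend.update(day, value)
print(trend.slope(), trend.intercept())  # 2.0 10.0
```

The trade-off is that a single straight line captures only the simplest trends; more expressive models need more computation per update, which is where the scaling pressure described above comes from.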

ML algorithms today are limited by the computational power of classical computers. Quantum computing may be able to process certain large data sets much faster and feed the results to AI technologies, allowing data to be analyzed at a more granular level to identify patterns and anomalies. Quantum computing could also help with data integration by running comparisons between schemas to quickly analyze and understand the relationship between them. To give some perspective, Google reported that Sycamore solved a problem in 200 seconds that, by its estimate, would have taken today’s fastest supercomputer 10,000 years. IBM publicly disputed that estimate, arguing that a classical supercomputer could finish the task in days. Even so, results like this open new possibilities for the future of big data and analytics.
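The scale of the reported gap is easy to check with a little arithmetic. This Python sketch converts 10,000 years to seconds, assuming 365.25-day years, and divides by the reported 200 seconds. The `speedup` helper is illustrative, and its result is only as reliable as the classical runtime estimate passed into it.

```python
# Seconds in a Julian year (365.25 days), an assumption for this estimate.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 s


def speedup(quantum_seconds: float, classical_years: float) -> float:
    """Ratio of the estimated classical runtime to the reported quantum runtime."""
    return classical_years * SECONDS_PER_YEAR / quantum_seconds


ratio = speedup(quantum_seconds=200, classical_years=10_000)
print(f"{ratio:,.0f}")  # 1,577,880,000
```

A classical estimate measured in days rather than millennia would shrink this ratio by several orders of magnitude, so the headline figure depends heavily on the baseline chosen.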

As the world changes its approach to IT and information architecture, its approach to computing and big data must change as well. Organizations are facing different problems today, which means they need different solutions.

ISG stays up to date with the latest digital trends in IT, so we can help enterprises make decisions about big data and analytics along the digital transformation journey. Contact us to discuss how we can help solve your current big data and analytics challenges and put your organization on a path toward a digital future.